I usually write articles when something frustrates me. In this case it is the way VMware ESX orders SATA controllers, which caused a couple of failed Synology DSM upgrades from 6.1.7 to 6.2.X (X>0). Those releases require a SATA controller instead of the SCSI controller (which kept working up to DSM 6.2.0 in my case, and most probably in yours too).

Consider the following scenario:

- DSM 6.1.7 – up and running

- VMXNET3 Network Adapter

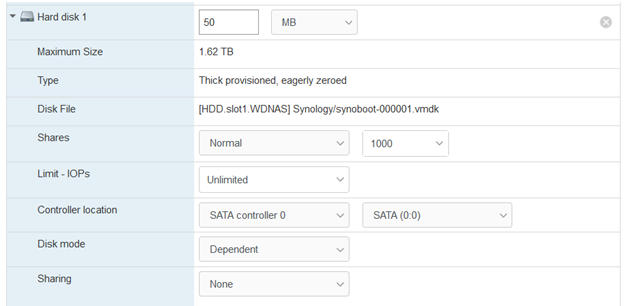

- Jun’s loader 1.02b DS3615xs – attached as SATA (0:0) (SATA Controller 0, Target 0)

- VMware Paravirtual Storage Controller – for data disks, attached as SCSI (0:X) (SCSI Controller 0)

We want to upgrade to DSM 6.2.2, which involves several steps (not going into details here, you can google them):

- I somehow prefer going through 6.2.0 first (DSM_DS3615xs_23739.pat) since it’s the last DSM working with SCSI Controller

- Use the newer Jun’s loader 1.03b https://xpenology.club/downloads/ – Replace synoboot.img in your datastore

- Replace the VMXNET3 Network Adapter with E1000E. Take care if you’ve used static MAC Addresses!

- Run a manual update using DSM_DS3615xs_23739.pat

- Checkpoint 1: If everything is fine your Synology VM shall boot the new DSM 6.2 and work with your existing SCSI Controller!

- Change the SCSI to SATA Controller – It’s usually relatively straightforward:

- Add the new “SATA Controller 1” (remember synoboot is at SATA Controller 0)

- Re-attach the disks from the SCSI Controller to SATA Controller 1. Use the same disk ordering if you’d like – SCSI (0:0) –> SATA (1:0); SCSI (0:1) –> SATA (1:1), etc.

- Remove the SCSI Controller

- Checkpoint 2: Check if DSM 6.2 boots – here comes the interesting part – sometimes Synology boots in “recovery/installation” mode. In my case I got 1 successful replacement on ESX 6.0 and 2 failures, one on ESX 6.0 and one on ESX 6.7U3 (so it is not an ESX version specific issue)
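
The re-attachment in the SCSI to SATA step can be written down as a small plan before touching the VM. This is only a sketch: the function name `plan_scsi_to_sata` and the label format are my own for illustration, and it does not talk to VMware at all. It just prints which SCSI (0:N) slot goes to which SATA (1:N) slot, and refuses to put data disks on the controller that holds synoboot.

```python
BOOT_CONTROLLER = 0  # synoboot sits on SATA Controller 0 in this scenario


def plan_scsi_to_sata(scsi_targets, sata_controller=1):
    """Map each SCSI (0:N) data disk to SATA (sata_controller:N), keeping the order.

    Hypothetical helper: it only builds labels, it does not modify any VM.
    """
    if sata_controller == BOOT_CONTROLLER:
        raise ValueError("data disks must not share the controller used by synoboot")
    plan = {}
    for target in sorted(scsi_targets):
        plan[f"SCSI (0:{target})"] = f"SATA ({sata_controller}:{target})"
    return plan


if __name__ == "__main__":
    for old, new in plan_scsi_to_sata([0, 1, 2]).items():
        print(f"{old} --> {new}")
```

Keeping the target number unchanged is just a convenience, so the disks show up in the same order after the move.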

TL;DR – VMware sometimes mixes up the SATA controller order!

The expected behavior is for SATA Controller 0 to come first (our boot drive goes there) and SATA Controller 1 to come second. VMware, however, swapped the order! I haven’t dug into why VMware orders them this way, but I wanted to understand why it messes up DSM.

First of all, how to recognize easily that ordering is messed up:

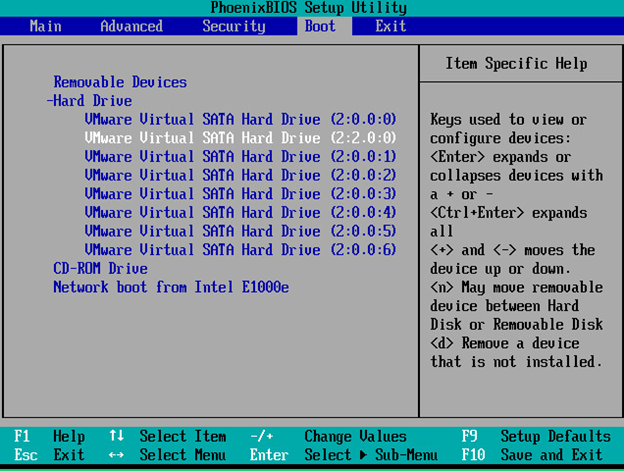

- Look at the VM BIOS – our boot disk is attached at SATA (0:0), but in the BIOS it shows up at host 2 – 2:2.0:0. Look at the 7 additional data disks attached to host 1 (2:0.0.X), even though in VMware they are attached to SATA Controller 1 (SATA 1:X)

- Go to the Synology manual install step – don’t install, but take a look at the warning Synology raises about overwriting your data. If you see only a single disk (in my case it was disk “2”), then the order is messed up, since what you are seeing is the boot disk.

Then what’s the impact of this controller order? It seems to come down to the specifics of the Synology boot loader, which expects:

- Only 1 disk on the first controller, the boot disk (it hides the remaining ones using sata_args – SataPortMap=1)

set sata_args='sata_uid=1 sata_pcislot=5 synoboot_satadom=1 DiskIdxMap=0C SataPortMap=1 SasIdxMap=0'

Take a look at https://gugucomputing.wordpress.com/2018/11/11/experiment-on-sata_args-in-grub-cfg/ for a good explanation of sata_args
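
To make the sata_args line a bit less cryptic, here is a minimal sketch that splits it into key/value pairs and reads two of the values. It assumes the interpretation commonly described in the XPEnology community (and in the article linked above): each digit of SataPortMap is the number of ports on one controller, and each pair of hex digits in DiskIdxMap is the first disk index assigned to that controller. The function names are mine, and the loader itself may treat these values differently.

```python
def parse_sata_args(line):
    """Turn a grub.cfg 'set sata_args=...' line into a dict of key -> value strings."""
    _, sep, value = line.partition("sata_args=")
    if not sep:
        value = line  # no prefix found, treat the whole string as the argument list
    value = value.strip().strip("'\"")
    return dict(item.split("=", 1) for item in value.split())


def controller_layout(args):
    """Per-controller port count and first disk index (assumed semantics, see above)."""
    port_map = args.get("SataPortMap", "")
    idx_map = args.get("DiskIdxMap", "")
    layout = []
    for i, ports in enumerate(port_map):
        pair = idx_map[2 * i:2 * i + 2]
        first_index = int(pair, 16) if len(pair) == 2 else None
        layout.append({"controller": i, "ports": int(ports), "first_disk_index": first_index})
    return layout


if __name__ == "__main__":
    line = ("set sata_args='sata_uid=1 sata_pcislot=5 synoboot_satadom=1 "
            "DiskIdxMap=0C SataPortMap=1 SasIdxMap=0'")
    print(controller_layout(parse_sata_args(line)))
```

With the line from the loader this reports a single port on the first controller, starting at disk index 12 (hex 0C). Under the assumption above, that is why the loader only works as intended when the controller holding synoboot is really enumerated first.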

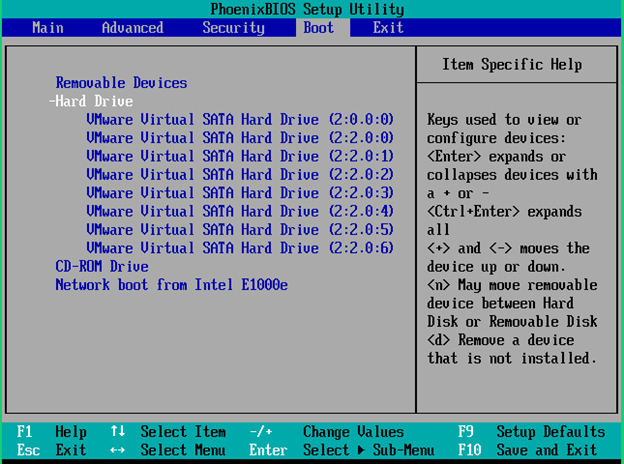

The solution is simple: re-attach the disks to the other controller. For example, the boot disk SATA (0:0) becomes SATA (1:0), and the data disks SATA (1:X) become SATA (0:X)

Here’s how they look in the BIOS after the controller swap (note the boot disk is at 2:0.0:0):
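
The swap itself is mechanical, so it can be sketched as a tiny function. Again this is only an illustration under my own assumptions: the disk names (`synoboot`, `data1`, ...) are hypothetical, attachments are plain (controller, target) pairs, and nothing here changes the VM. You still re-attach the disks in the VM settings by hand.

```python
def swap_controllers(attachments, a=0, b=1):
    """Return a copy of the attachments with controllers a and b exchanged.

    attachments maps a disk name to its (controller, target) pair.
    Targets are left untouched, only the controller number changes.
    """
    swapped = {}
    for disk, (controller, target) in attachments.items():
        if controller == a:
            controller = b
        elif controller == b:
            controller = a
        swapped[disk] = (controller, target)
    return swapped


if __name__ == "__main__":
    current = {"synoboot": (0, 0), "data1": (1, 0), "data2": (1, 1)}
    for disk, (controller, target) in swap_controllers(current).items():
        print(f"{disk}: SATA ({controller}:{target})")
```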

- Checkpoint 3: DSM 6.2 now boots using correctly ordered SATA Controllers

- Finally you can install DSM 6.2.2 (DSM_DS3615xs_24922.pat), which installed successfully in my 3 environments after I got the right VMware VM “hardware” configured

- Checkpoint 4: DSM 6.2.2 boots successfully

A few side notes:

- in 2 of my upgrades, DSM lost its static IP and had to be reconfigured (use find.synology.com in such case)

- in 2 of my upgrades, DSM system partition got “corrupted” and the underlying /dev/md0 array had to be rebuilt.